Over at the WAO blog, I’ve published a post about ambiguity and how it can be applied in work contexts.

You can access the post here.

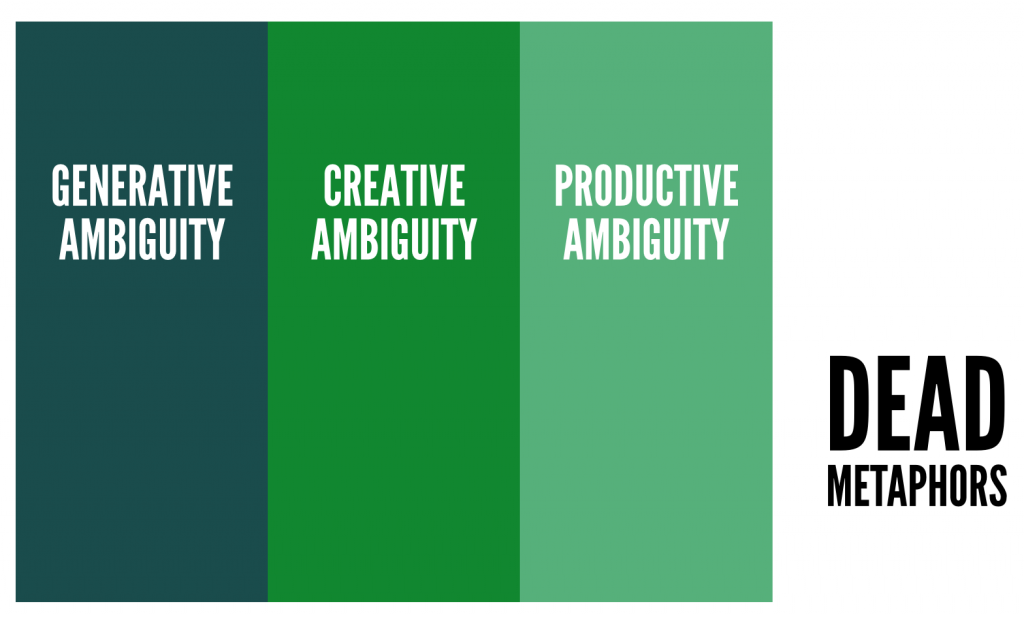

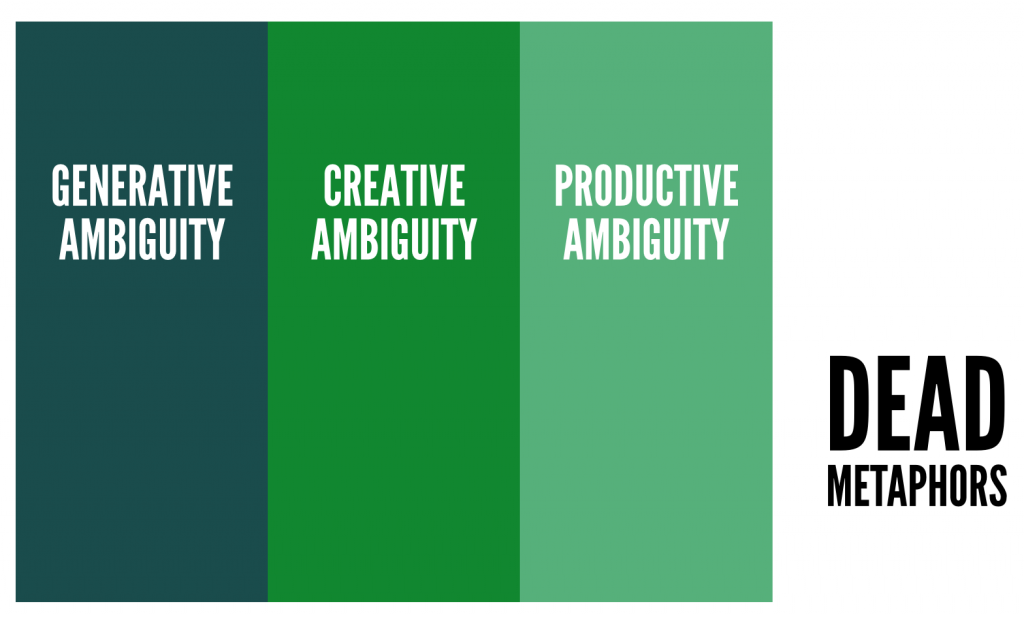

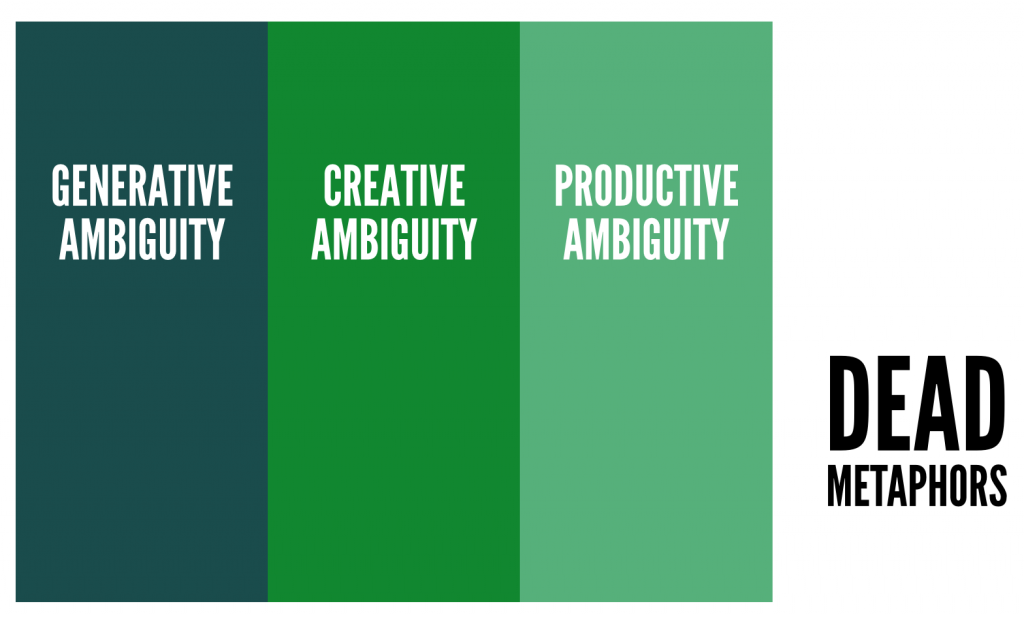

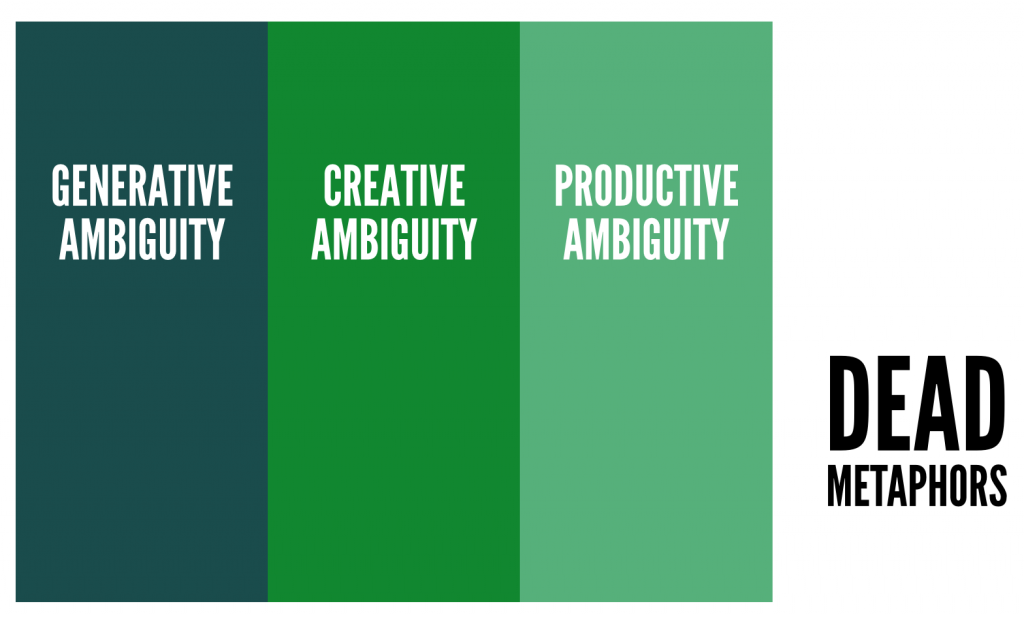

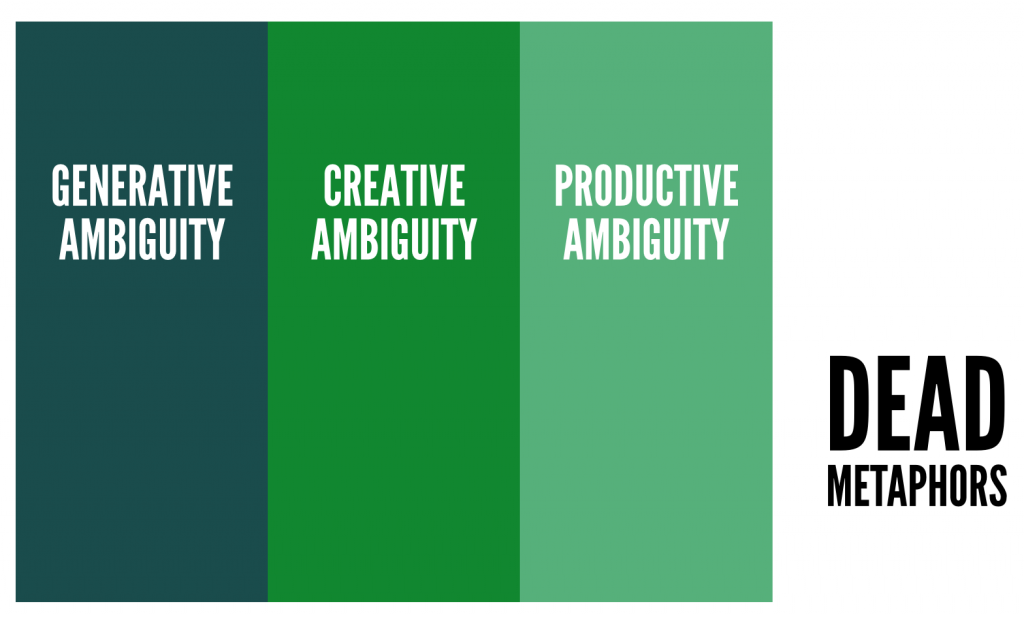

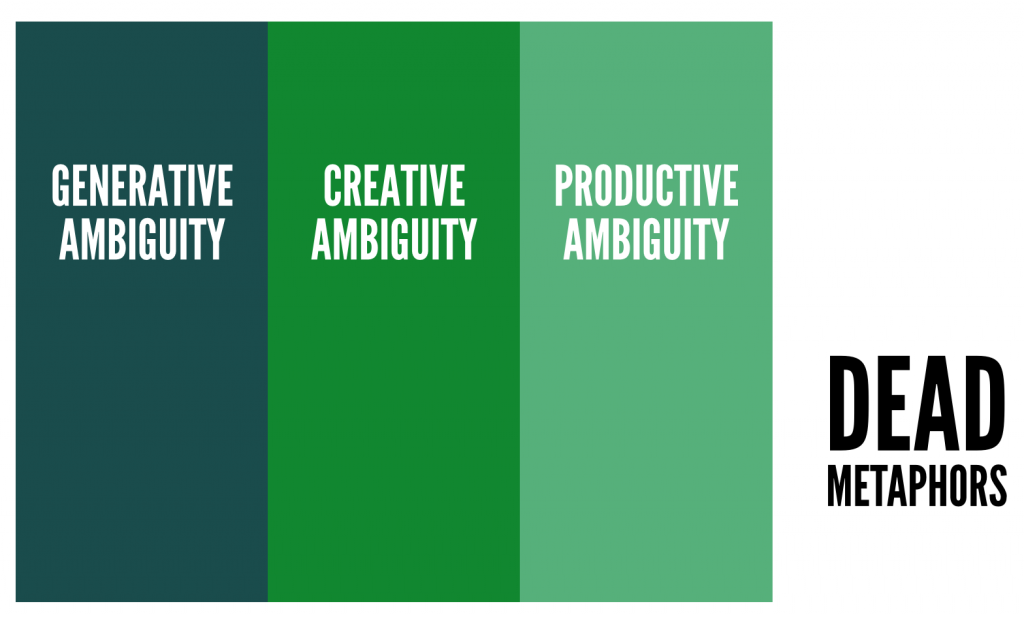

Introducing a continuum of ambiguity and reflecting on the importance of avoiding dead metaphors in the workplace.

After all, the most basic form of recognition is: “I see you.”

When most people think about earning a badge for something, they think about being recognised for that thing. This is correct: badges do in fact signify recognition.

However, most badges are issued for a subset of recognition practices, namely to indicate that something is in some way ‘valid’. For example:

Badges of this type are useful, particularly when thinking about people who are in transition. This usually happens when people are looking to land their first job, seeking a promotion at work, or looking to move on in their career.

To use my continuum of ambiguity, we can say that validity badges are ‘productively ambiguous’. That is to say that most people looking at the badge and its associated metadata will understand what it is for and how it is useful.

Further to the right, and off the end of the continuum, is the danger zone of ‘dead metaphors’. In this context, that would apply to badges that validate skills we expect everyone to have.

I want to talk about badges for recognition which fall in the other two parts of the continuum: generative ambiguity and creative ambiguity. After all, we don’t only engage in recognition practices to validate other people’s skills.

Sometimes we recognise things such as:

In fact, sometimes it’s enough just to recognise somebody or some thing’s existence.

One dictionary definition I came across, among the many I looked at in preparation for this post, defined recognition as being an ‘uncountable noun’. In other words, recognition is a quantity which is measured in an undifferentiated unit, rather than being something which can be divided into discrete elements.

Let’s return to the two parts of the continuum of ambiguity that I want to discuss in relation to badges:

In my work on digital literacies, I often talk about power dynamics that are at play when people come up with definitions. By naming things we attempt to exert power. There is nothing inherently wrong with this, and productive ambiguity is how we get things done at scale.

But focusing on scale isn’t always useful. Our relationships with family, friends, and community members don’t always scale. This is why there’s been so much discussion of Dunbar’s Number over the last 10 years. There may well be a ‘cognitive limit’ to the number of people with whom we can maintain stable social relationships.

The continuum of ambiguity exists at the overlap of the connotative and denotative aspects of the words we use to convey meaning. If something denotes something else then it ‘stands’ for it. If something connotes something else then it is indicative of it.

The same is true of badges for recognition practices. Instead of only leaning towards ever more denotative badges, I want to suggest that we leave room for badges that connote relationships, potential, aspiration, and even existence. After all, the most basic form of recognition is: “I see you.”

A reminder to myself to be aware of the power of ambiguity within my own work and of the different forms it can take.

Ambiguity can often be caused through intentional or unintentional use of zeugma and syllepsis:

In rhetoric, zeugma (/ˈzjuːɡmə/; from the Ancient Greek ζεῦγμα, zeûgma, lit. “a yoking together”) and syllepsis (/sɪˈlɛpsɪs/; from the Ancient Greek σύλληψις, sullēpsis, lit. “a taking together”) are figures of speech in which a single phrase or word joins different parts of a sentence.

In my doctoral thesis, I noted that the words ‘digital’ and ‘literacy’ were combined to produce different kinds of ambiguity:

Zeugmas are figures of speech that join two or more parts of a sentence into a single noun, such as ‘digital literacy’. It is unclear here whether the emphasis is upon the ‘digital’ (and therefore an example of a prozeugma) or upon the ‘literacy’ (and therefore a hypozeugma). Which is the adjective?

Once you’ve spotted your first zeugma, you see them everywhere. They can be used to enlighten but also to deceive. I’m often a fan: terms where two words are ‘yoked together’ can be incredibly productive, leading to breakthroughs in groups that would otherwise be directionless.

The way this approach works is to play around with the boundary of what something denotes (i.e. represents) and what it connotes (i.e. implies).

Within this overlap are different types of ambiguity, which I usually represent with the continuum of ambiguity (below). This is explained in more detail within a paper I wrote with my thesis supervisor, but broadly speaking:

What’s interesting in my work is that I get to collaborate with quite a few different organisations, either as clients or as sister organisations working towards a shared goal.

There have been some interesting zeugmas that arise and do some important work at the level of Creative Ambiguity and Productive Ambiguity. For example: Cooperative Technologists [CoTech] where it’s often unclear as to whether we’re *cooperative* technologists or cooperative *technologists*. Is the emphasis on the former or the latter?

My experience is that while zeugmas open up space for productive discussion and action, the terms themselves are usually on a journey. They may move in a linear way from Generative through Creative, to Productive Ambiguity before becoming a Dead Metaphor. But more often, they oscillate between Creative Ambiguity and Productive Ambiguity, with the terms being reinvigorated every so often with new insights and impetus.

In general, this post on an oft-neglected blog is mostly a reminder to myself to be aware of the power of ambiguity within my own work and of the different forms it can take.

Vagueness is, of course, always to be avoided (and sits to the left of Generative Ambiguity) but it’s actually quite a rare thing in my world.

A story using characters to represent different parts of the continuum of ambiguity.

Imagine the situation: there’s a business meeting. I’ll leave it up to you as to whether this is an in-person meeting or a virtual one, but there are five people present:

These are all supposed to represent various parts of a continuum that I’ve used a lot on this blog. In fact, I’ve used this image in pretty much every post. However, what it doesn’t include is the area to the left of the continuum — thoughts, ideas, and utterances that are ‘vague’.

Back to the meeting, and Dave is speaking in clichés (aka ‘dead metaphors’) again. “What we need to do is synergise our verticals” he says. Pravati rolls her eyes; Dave’s been spending too much time on LinkedIn.

“Dave, I don’t think you understand our strategic business direction” says Vera. “We’re aiming to integrate new technologies to enable growth!” Everyone nods their heads, but no-one really understands what she means by this. “Have you got any ideas, Gerald?”

Looking uneasily around the room, Gerald utters a nervous little cough before saying, “well, I did have one idea, but I’m not sure if it’ll make much sense to you.” He looks out of the window, inhales deeply, and then spends the next few minutes outlining how he believes that web3 is like a cross between the Roman empire and freeform jazz. The rest of the meeting’s participants are utterly lost but try not to show it.

After a few awkward seconds of silence, Chitundu raises a finger and starts talking about a conference he went to recently. “At BizFest last year there was a speaker who was talking about the application of blockchain to verify core business processes,” he says. “What I liked about it was that he was really practical, but I can’t exactly remember what I thought we could use it for.”

Dave and Gerald are no longer concentrating and are instead checking their emails, but Vera chips in. “Yes, this is what I meant about integrating new technologies to enable growth!” Emboldened, Chitundu continues, “well you know how in the last annual report it showed we spend a lot of money on de-duplicating records? Perhaps we could use it for something to do with that?”

Suddenly, Pravati is drawing shapes connected with lines on a whiteboard. “Yes!” she says, “blockchain is basically a boring, back-office technology. So as long as we don’t store any sensitive data on there, we could use it to streamline our verification processes.” Vera’s eyes have glazed over slightly but she’s nodding.

Chitundu is excited. “I love this, and I think we should perhaps experiment with it a bit. How about a working group to explore some of Pravati’s ideas further?” Vera seems hesitantly accepting of the idea. She looks to Gerald, but he’s knee-deep in his inbox. She looks to Dave, who’s perked up since hearing the word ‘blockchain’. He’s nodding furiously.

“OK, Pravati could you have a look into this and then report back at our next meeting please?” says Vera. “Sure, I’d be happy to,” says Pravati, who’s been burned by Vera’s vague requests before. “I just need to ensure we’re agreed on some scope. I’d like to take a Wednesday afternoon to focus on this for the next three weeks, and pull in Keiko to double-check the technical side of things.”

“Alright, I’ll have to check with Keiko’s line manager, but that sounds fine,” says Vera. “Also, how would you like me to report back? In a report, with a slide deck, or something else?” asks Pravati. “Oh just some slides will be fine” says Vera.

As I argued in a paper I (self-)published with my thesis supervisor 11 years ago, if something is Vague, then according to the Oxford English Dictionary, it is “couched in general or indefinite terms” being “not definitely or precisely expressed”. In other words, as with the example of Vera above, the person expressing the idea doesn’t really know what they’re talking about.

When it comes to Generative ambiguity, the part of the continuum represented by Gerald, then an individual gives a name to a nebulous collection of thoughts and ideas. It might make some sense to them, given their own experiences, but it can’t really be conveyed well to others.

Creative ambiguity is where one aspect of a term is fixed, much in the way a plank of wood nailed to a wall would have 360-degrees of movement around a single point. Whilst a level of agreement can exist here, for example in the case of Chitundu talking about blockchain in a business context, it nevertheless remains highly contextual. It is dependent, to a great extent, upon what is left unsaid. A research project just on ‘blockchain’ in general would probably fail.

I would argue that Productive ambiguity is where real innovative work happens. This is the least ambiguous part of the continuum, an area in which more familiar types of ambiguity such as metaphor are used (either consciously or unconsciously) in definitions. The phrase “streamline our verification processes” in the example is productively ambiguous because it defines an area of enquiry without nailing it down too specifically.

Finally, we have Dead metaphors which happen when people want to remove all wiggle room from a term. Terms and the ideas behind them become formulaic and unproductive. They can, however, be resurrected through reformulation and redefinition. So when Dave in the story above talks in buzzwords he’s cribbed from his network without understanding what he means, he’s not really saying anything.

My reason for continually talking about ambiguity is that I believe there is a sweet spot in all areas of life. If an idea or concept being introduced doesn’t make sense to you, or if it makes sense only to you, then it needs more work. At the other end of the spectrum, if what’s being mentioned just feels clichéd and isn’t bringing any enlightenment, that’s no good either.

The interesting work happens when there’s an idea or concept that kind of makes sense to people with similar backgrounds, experience, and/or interests. If it’s left there, though, it’s not useful enough. The idea or concept needs to be worked on further so that it can be applied more widely, so that others who don’t share that background, experience, or interest can see themselves in it.

I’d argue that this is what successful advertising and branding is. It’s how political slogans work. And, for the purposes of this post, it’s how things get done in a business setting.

When we’re doing new work, we tend to jump to conclusions and define things too quickly. This post outlines why that’s a bad idea, and what we can do to counteract these tendencies.

In his cheat sheet to cognitive biases, Buster Benson categorises the 200+ he identifies into three ‘conundrums’ that we all face:

One of the reasons that the Manifesto for Agile Software Development has been so impactful, even beyond the world of tech, is that it’s a form of granting permission. Instead of having to know everything up front and then embark on a small matter of programming, there is the recognition that meaning can accrete over time as systems develop.

It is therefore crucial to ensure that the project heads off on an appropriate trajectory. It’s also important that it can be nudged back on course should it stray from meeting the needs of users/participants/audience.

A tendency that I see with many innovation projects with which I’ve been involved is a lack of tolerance for ambiguity. By this I mean that because, as Benson notes, we never have enough time, “we jump to conclusions with what we have and move ahead”. In addition, because the world is a confusing place (especially when we’re doing new things!) “we filter lots of things out” and “create stories to make sense out of everything”.

It’s understandable that we do this, either consciously or unconsciously, and I’m certainly not immune from it! However, ever since studying ambiguity helped me with my doctoral thesis, I’ve been interested in how understanding different forms of ambiguity can help me in my work as a cooperator and consultant.

I’m going to discuss a Continuum of Ambiguity which I developed, based on the work of academics, and in particular Empson (1930), Robinson (1941) and Abbott (1997). I’m going to try and keep what follows as practical as possible, but for background reading you might find this article I wrote over a decade ago useful, or indeed Chapter 3 of my Essential Elements ebook, which is available here.

If we imagine ambiguity to be a continuum, then a lot of what happens with innovation projects happens at either end of the continuum. To the far left, things are left unhelpfully vague in a way that nobody really knows what’s going on. Anything and everything is up for grabs.

Alternatively, to the far right of the continuum, there’s a rush to nail everything down because this seems just like a project you’ve done before! Or there are massive time/cost pressures. Except of course, it isn’t just like that previous project, and by rushing you burn through even more time and money.

In my experience, what’s necessary is to sit with the ambiguity that every project entails: to understand what’s really going on, to look at things from many different angles, and ultimately, to shepherd the project into a part of the continuum I call ‘productive ambiguity’.

To define quickly the three parts of the Continuum of Ambiguity:

There was a time when ‘Uber for X’ was a popular way of getting funding. Without nailing down exactly what would happen or how it would work, the simplicity and game-changing approach that Uber took to booking a taxi could be applied to other areas or industries.

These days, ‘Uber for X’ is a dead metaphor as it’s been overused and is little better than a cliché. This is important to note, as an idea does not become (or remain) productively ambiguous without some work.

I’m working with the Bonfire team at the moment on the Zappa project. Unlike something such as Mastodon (“Twitter, but decentralised!”) or Pixelfed (“Instagram, but federated!”) the team hasn’t completely settled on a way of describing Bonfire which is productively ambiguous.

The tendency, which they are resisting nobly, is always to nail things down. Especially when you have funders. For example, it would be easy to decide in advance what is technically possible when attempting to counteract mis/disinformation in federated networks. Instead, because Bonfire is so flexible, they are sitting with the ambiguity and searching for use cases and metaphors which will help illuminate what might be useful.

One promising avenue, as well as doing the hard yards of user research and the synthesis of outputs this generates, is to use Marshall McLuhan’s notion of ‘tetrads’. The above example is taken from a post by Doc Searls in which he explicitly considers social media and what it improves, obsolesces, retrieves, and reverses.

There is no one framework or approach which can give the ‘truth’ of how a project should proceed, or what users want. Instead, by considering things from multiple angles, the overlap between desirable, technically possible, and needed by users comes into focus.

Instead of a conclusion, I will finish with an exhortation: sit with ambiguity! And while you’re sitting with it as a team, talk about it and resist the temptation to bring in dead metaphors. Rather than conceptualising the conversations you have about the project as “going round in circles”, consider that it’s more likely that you are spiralling round an idea in an attempt to better understand and define it.

Given the news of the passing of Terry Jones, it seems appropriate to kick things off with one of my favourite parts of any Monty Python film:

Specifically:

ARTHUR: Who lives in that castle?

WOMAN: No one lives there.

ARTHUR: Then who is your lord?

WOMAN: We don’t have a lord.

ARTHUR: What?

DENNIS: I told you. We’re an anarcho-syndicalist commune. We take it in turns to act as a sort of executive officer for the week.

ARTHUR: Yes.

DENNIS: But all the decisions of that officer have to be ratified at a special biweekly meeting.

ARTHUR: Yes, I see.

DENNIS: By a simple majority in the case of purely internal affairs,–

ARTHUR: Be quiet!

DENNIS: –but by a two-thirds majority in the case of more–

ARTHUR: Be quiet! I order you to be quiet!

WOMAN: Order, eh — who does he think he is?

ARTHUR: I am your king!

WOMAN: Well, I didn’t vote for you.

ARTHUR: You don’t vote for kings.

Monty Python and the Holy Grail: Peasant Scene

Recently, I’ve become really interested in how decisions are made. Not personal decisions, such as “shall I change career?” or “who should I marry?”, but organisational decisions, such as “which project management tool should we use?” or “what should our strategy for the next three years be?”

As useful as they can be elsewhere in life, things like The Decision Book don’t really cut it here. What we need is an approach or matrix; a way of deciding how, going into the situation, decisions are going to be made.

Related to this, I think, is the “default operating system” of hierarchy. I’ve cited elsewhere Richard D. Bartlett talking about the bad parts of hierarchy as being ultimately about what he calls “coercive power relationships”. In a hierarchy, people towards the bottom of the pyramid are being paid by the person (or people) at the top of the pyramid, so what they say, goes.

This means that, within a hierarchy, you’ve got a structure for the decision-making process, with power relationships between participants. And then, however democratic the process purports to be, it’s ultimately the Highest Paid Person’s Opinion (HiPPO) that counts.

But what about in other situations, where the decision-making structure hasn’t been created? Who decides then?

For me, this isn’t an idle, theoretical question. I’ve seen the problems it can cause, especially around inaction. You can get so far by meeting up and having a big old discussion, but then how do you come to a binding decision? It’s tricky.

With non-hierarchical forms of organising, even getting into the decision-making process requires two things to happen first:

Let’s consider a fictional, but relatable, example. Imagine there’s a group of parents who have voluntarily decided to come together to raise money for their children’s sports team. They are not forming a company, non-profit, charity or any other form of organisation. They do not operate within a hierarchy. Nor have they decided how binding decisions can be made by the group.

Now let us imagine that this group of parents has to decide how best to raise money for the sports team. And once they’ve done that, they have to decide what to spend the money on. How do those decisions get made? What kinds of approaches work?

It is, of course, an absolute minefield, and perhaps why volunteering for these kinds of roles seems to be on the decline. These situations can be particularly stressful without guidance or some kind of logical approach to non-hierarchical organising.

What has this got to do with ambiguity, and more specifically, the continuum of ambiguity shown above? I’d suggest that what is required in our fictional example is a way of organising that strikes a balance. In other words, one that is Productively Ambiguous.

Hierarchies are a form of organising that can work well in many situations. For example, high-stakes situations, times when execution is more important than thought, and the military. For everything else, hierarchical organising can be a dead metaphor. It doesn’t represent how things are on the ground, and doesn’t allow any productive work to happen.

Imagine the situation if that volunteer group of parents decided to organise into a hierarchy. I should imagine they would spend more time thinking about and discussing power relationships and status than they would doing the work they’ve come together to achieve.

To the left of Productive Ambiguity lies creative ambiguity:

Whilst a level of consensus can exist within a given community within this Creative ambiguity part of the continuum, it nevertheless remains highly contextual. It is dependent, to a great extent, upon what is left unsaid – especially upon the unspoken assumptions about the “subsidiary complexities” that exist at the level of impression. The unknown element in the ambiguity (for example, time, area, or context) means that the term cannot ordinarily yet be operationalised within contexts other than communities who share prior understandings and unspoken assumptions.

Digital literacy, digital natives, and the continuum of ambiguity

Creative ambiguity relies on unspoken assumptions and previous tight bonds between people. This approach might work extremely well if, for example, the parents had themselves been part of a sports team together in their youth.

The chances are, however, that there would be at least a minority in the group who do not share this commonality. As a result, those unspoken assumptions would become a stumbling block and a barrier.

Far better, then, to focus on the area of productive ambiguity:

Terms within the Productive part of the ambiguity continuum have a stronger denotative element than in the Creative and Generative phases. Stability is achieved through alignment, often due to the pronouncement of an authoritative voice or outlet. This can take the form of a well-respected individual in a given field, government policy, or mass-media convergence on the meaning of a term. Such alignment allows a greater level of specificity, with rules, laws, formal processes and guidelines created as a result of the term’s operationalisation. Movement through the whole continuum is akin to a substance moving through the states of gas, liquid and solid. Generative ambiguity is akin to the ‘gaseous’ phase, whilst Creative ambiguity is more of a ‘liquid’ phase. The move to the ‘solid’ phase of Productive ambiguity comes through a process akin to a liquid ‘setting’.

Digital literacy, digital natives, and the continuum of ambiguity

Instead of hierarchy or unspoken assumptions, progress happens by following a path between over-specifying the approach, and allowing chaos to ensue.

In practice, this often happens by one or a small number of people exerting moral authority on the group. This occurs through, for example:

I have more to write on all of this at some point in the future, but I will leave it here for now. It’s interesting that this is at odds with the way that I see many attempts at decision-making happen – either inside or outside organisations…

There’s a couple of articles it might be worth reading to give some background to this post:

As I’ve argued many times over the last few years, ambiguity is really useful… until it isn’t. As soon as a concept becomes a dead metaphor it’s in trouble. We may be witnessing this with the term ‘Open Core’:

The open-core model is a business model for the monetization of commercially-produced open-source software. Coined by Andrew Lampitt in 2008, the open-core model primarily involves offering a “core” or feature-limited version of a software product as free and open-source software, while offering “commercial” versions or add-ons as proprietary software.

Wikipedia

Let’s zoom out and define our terms, as the above definition lumps together ‘Free Software’ (actually ‘Free Libre Open Source Software’, or FLOSS) and ‘Open Source Software’ (or OSS). All FLOSS is OSS but not all OSS is FLOSS. The open source part is a necessary, but not a sufficient, condition.

Free software… [FLOSS] is computer software distributed under terms that allow users to run the software for any purpose as well as to study, change, and distribute it and any adapted versions. [FLOSS] is a matter of liberty, not price: users—individually or in cooperation with computer programmers—are free to do what they want with their copies of a free software (including profiting from them) regardless of how much is paid to obtain the program. Computer programs are deemed free if they give users (not just the developer) ultimate control over the software and, subsequently, over their devices.

Wikipedia

FLOSS is as much a political approach as it is a technological one. It’s pretty hardcore, and represents a positive (as opposed to a negative) form of liberty:

It is useful to think of the difference between the two concepts in terms of the difference between factors that are external and factors that are internal to the agent. While theorists of negative freedom are primarily interested in the degree to which individuals or groups suffer interference from external bodies, theorists of positive freedom are more attentive to the internal factors affecting the degree to which individuals or groups act autonomously.

Stanford Encyclopedia of Philosophy

That’s a good way to think about OSS, which is more concerned with rights and licenses, and with allowing collaboration to happen without interference:

Open-source software (OSS) is a type of computer software in which source code is released under a license in which the copyright holder grants users the rights to study, change, and distribute the software to anyone and for any purpose. [OSS] may be developed in a collaborative public manner. [OSS] is a prominent example of open collaboration.

Wikipedia

Because OSS focuses on the negative form of liberty, the temptation can be to find loopholes in it for commercial gain. This has happened with a bunch of companies using the Open Core model.

The definition of Free Software specifically allows software to be sold by anyone, for as much as they want. Open Core attempts to limit who can make money from software — which seems to be at odds not only with Free Software, but OSS too.

The concept of open-core software has proven to be controversial, as many developers do not consider the business model to be true open-source software. Despite this, open-core models are used by many open-source software companies.

Wikipedia

I’m uneasy with the Open Core approach, but can understand the pressure to apply the business model when a company has investors.

The reason why people don’t like the Open Core approach is that it doubles down on the negative form of liberty, turning Open Source into a dead metaphor. It’s extractive, and focused on making capitalists richer instead of enriching the commons.

Consultants like me are sometimes engaged by clients on a very short-term basis, and sometimes embedded inside organisations for much longer periods of time. Yesterday, I started a period of ‘embedding’, this time with Moodle.

While I’ll discuss elsewhere the things I’ll be working on over the coming weeks and months, in this post I want to consider what it’s like to start working with a new organisation from a philosophical perspective.

The areas of enquiry represented by what we call ‘Philosophy’ can be sub-divided in many ways and, of course, people differ as to how this should be done. When I think about Philosophy, over and above the (valuable) ‘history of ideas’ courses taught to undergraduates, I tend to use the following buckets:

I’m sure some people reading this may disagree with these simple definitions, and with separating out metaphysics and ontology, but hopefully you’ll see why I tend to do this in a moment.

When a person joins a new organisation, there’s often a mad rush to get them ‘up-to-speed’ as quickly as possible. Not one minute should be wasted to ensure that they can reach full operating efficiency as soon as possible. Taken to its logical conclusion, I’m sure there are plenty of organisations that would like to be able to send the required knowledge directly into a new employee’s brain, Matrix-style.

I was ‘onboarded’ by Moodle HR yesterday and, while they can improve the experience, it was the first time an organisation has actually set out its information landscape. It sounds like such a simple thing, but this ontology of the organisation as it sees itself, is a hugely valuable thing to share. After all, the inverse of this — finding out about things in a piecemeal way — can be rather anxiety-inducing.

In the past, I’ve joined organisations that explicitly don’t share things like org charts and what technologies they use. The reason given for this is often that such documents would be ‘out of date as soon as they’re created’, but in reality it’s usually because there’s a huge disconnect between different parts of the organisation. There is no map or shared reality.

Ontology is an easy one for organisations to focus upon. They can point to things and draw employees’ attention to them, even if it’s just directing people to a URL or a particular app. What’s harder is getting to the other three: the epistemology, ethics, and metaphysics of an organisation.

In an organisational context, the question of ethics isn’t solved simply by having a mission or a values statement. It has to be lived and demonstrated. There are large, organisation-wide ethical issues that can only really be solved by the leadership team. Examples of these might include the type of investment that the organisation takes, diversity issues in hiring, or the way it interacts with the natural environment.

As well as these large, organisation-wide issues, there are also much smaller, everyday ethical issues. In fact, some of these might not even be put under a banner of ‘ethics’ by most people. For example, I’d include in this list of smaller ethical issues things like the extent to which line managers and senior management ‘check up’ on employees.

“If you don’t have your own time, then you have no control of your day. And if you have no control of your day then you end up working longer than you should.” (Jason Fried)

These also include the way in which members of the organisation interact with one another. A lot of this would perhaps traditionally go under ‘culture’ or perhaps ‘etiquette’ but, actually, I think considering this as part of the wider ethics of an organisation is a better way to think about it.

It obviously takes time to figure out the lived reality of ethics within an organisation. The same is even more true of its epistemology and metaphysics. We’re going a stage deeper here.

You can see the ontology of an organisation; you can point to different things that exist. To a great extent you can see the outputs of the ethics of an organisation; you can point to the outputs it creates. When it comes to epistemology and metaphysics, however, we’re in a less tangible realm, that of the questions ‘what can we know?’ and ‘what else exists?’

It takes a while to understand the epistemology of an organisation. A new employee (or contractor) isn’t going to be able to figure out an organisation’s approach to the above questions in the first few days, or even weeks, after joining. Again, a lot of these issues are lumped within the rather unhelpful category of ‘culture’.

Epistemological questions are particularly interesting for organisations that deal primarily in bits and bytes and digital ‘stuff’ that can affect people’s lives in a material way. The frontiers offline are physical, whereas the frontiers online are conceptual.

Consequently, when organisations ask ‘what can we know?’ it’s a collection of individuals with hopes, dreams, and inbuilt biases making value judgements. More than that, it’s a collection of people responding to a particular set of pressures affecting them individually and corporately, and coming to collective epistemological decisions.

I’d include examples such as Facebook’s algorithmic approach to matching ‘people you may know’ here, as well as edtech companies gathering brainwave data to measure ‘student engagement’. As the Contrafabulists show with their work around predictions, when you say that the world is, or will be, a certain way, you’re revealing your epistemology.

Metaphysics is a harder thing to pin down, and perhaps the most difficult thing for an organisation to access directly. Some schools of philosophy, in fact, believe that metaphysics is meaningless and not worth studying. I disagree.

If we conceptualise metaphysics as asking the question ‘what else exists?’ then we can see that this is the kind of question that can drive organisations forward and help them to improve. This is particularly important for organisations that create digital products and services, as they can literally invent these from ones and zeroes.

Martin Dougiamas, CEO of Moodle, shared with me a book called Reinventing Organizations that’s inspired him recently. Although I’m yet to read it, even the book’s website gives an example of the kind of thing I mean when talking about organisational metaphysics. There’s a ‘pay-what-feels-right’ option for the book, instead of a single price. If we step back and think about it for a moment, this is an acknowledgement that the full value of something like a book can’t be captured in a financial transaction. It also makes us question what a ‘book’ actually is when it’s digital and the distribution cost drops close to zero.

I haven’t finished thinking about this philosophical approach to joining organisations. No doubt, any comments I receive below and on various networks of which I’m part will help inform my thinking. As, of course, will my experiences as I spend more time with Moodle.

The important thing for me is to realise that when you’re joining an organisation, what you’re doing is plugging yourself (a complex mixture of thoughts, emotions, and biases) into something that isn’t necessarily an easy thing to understand. Hurrying to ‘get up to speed’ quickly, therefore, might actually waste more time than it saves.

In this week’s BBC Radio 4 programme Thinking Allowed, there’s an important part about ambiguity:

Laurie Taylor explores the origins and purpose of ‘Business Bullshit’, a term coined by Andre Spicer, Professor of Organizational Behaviour at Cass Business School, City University of London and the author of a new book looking at corporate jargon. Why are our organisations flooded with empty talk, injuncting us to “go forward” to lands of “deliverables,” stopping off on the “journey” to “drill down” into “best practice”? How did this speech spread across the working landscape and what are its harmful consequences? They’re joined by Margaret Heffernan, an entrepreneur, writer and keynote speaker and by Jonathan Hopkin, Associate Professor of Comparative Politics at the LSE.

The particular part is the second section of the programme, in which Margaret Heffernan explains that organisations attempt (in vain) to eliminate ambiguity. As such, they play a constant game of inventing new terms and initiatives, which not only work no better than the previous ones, but serve to justify inflated salaries.

The episode is available online here.

There’s an ongoing flamewar between traditionalists and progressives, who believe that education should be about ‘knowledge’ or about ‘skills’ respectively. This has been going on, in various forms, at least since Thomas Henry Huxley and Matthew Arnold squared off in the 19th century about what kind of education is required to foster ‘true culture’.

As Bruce Chatwin demonstrates in his modern-day classic The Songlines, there are ways of knowing that are based on action rather than ‘head knowledge’. He details how Aboriginal Australian ‘knowledge’ is interwoven with the physical environment, is passed on primarily in an oral way, and comes with certain prohibitions as to who is allowed to ‘have’ such knowledge.

The Internet Encyclopedia of Philosophy’s entry on knowledge lists four main types:

I’ve always been of the opinion that the second type of knowledge listed here, knowledge ‘that’, is of limited value. If I were coming up with my own personal hierarchy of the relative importance of these kinds of knowledge, I’d put this one at the bottom. It’s the kind of knowledge that may be foundational but, taken to absurd lengths, just means you’re good at pub quizzes.

For me, it’s knowing ‘how’ that’s of central importance, and what we should focus on in education. From the IEP’s entry on knowledge, citing the celebrated ‘ordinary language’ philosopher Gilbert Ryle:

What Ryle meant by ‘knowing how’ was one’s knowing how to do something: knowing how to read the time on a clock, knowing how to call a friend, knowing how to cook a particular meal, and so forth. These seem to be skills or at least abilities.

This is why I think that ‘knowledge’ vs. ‘skills’ is a false dichotomy. The article continues:

Are they not simply another form of knowledge-that? Ryle argued for their distinctness from knowledge-that; and often knowledge-how is termed ‘practical knowledge’. Is one’s knowing how to cook a particular meal really only one’s knowing a lot of truths — having much knowledge-that — bearing upon ingredients, combinations, timing, and the like?

Going back to the Aboriginal example, this is where ‘knowledge’ that can’t be tested using a pencil-and-paper examination comes in. Knowing ‘how’ is usually described as a set of ‘skills’ in our culture, labelled as ‘vocational’, and given a back seat to the ‘more important’, ‘academic’ forms of knowledge. I think this is incorrect and should be remedied as soon as possible.

If Ryle was right, knowing-how is somehow distinct: even if it involves having relevant knowledge-that, it is also something more — so that what makes it knowledge-how need not be knowledge-that… Might knowledge-that even be a kind of knowledge-how itself, so that all instances of knowledge-that themselves are skills or abilities?

While reading to my six-year-old daughter last night, the word ‘instinctively’ was used by the author. We had a brief conversation about it, which revealed that, even at her young age, she understands the difference between the knowledge ‘that’ which is acceptable at school, versus the knowing ‘how’ which is valuable currency at home. In other words, she’s playing the game.